Does a New Study Prove We Should Decriminalize Drugs?

Hint: No.

Last week, WaPo’s Megan McArdle responded to my long essay in National Affairs about the drug crisis. Her conclusion is that the current situation is a good reason to legalize all drugs. I reply in City Journal (surprise: I do not agree!). But I want to zoom in on something McArdle said that didn’t make the cut in the CJ piece. She writes:

Consider that, in one large Indiana county, nearby overdoses actually rose in the wake of drug busts, according to a new paper in the American Journal of Public Health. While the researchers can’t establish causation, this is consistent with the hypothesis the researchers started with: When regular drug supplies are interrupted, users find that their tolerance diminishes at the same time that they are forced to seek out new suppliers selling products of unknown potency. The combination appears to be lethal.

The paper, “Spatiotemporal Analysis Exploring the Effect of Law Enforcement Drug Market Disruptions on Overdose, Indianapolis, Indiana, 2020–2021,” made something of a splash when it came out last month. Here’s some coverage. Here’s some more. And some more. Skim, you’ll get the idea.

Two of the paper’s authors, Brandon del Pozo and Grant Victor, have a recent piece at Harvard Public Health detailing their findings. As they explain it, they find that “in the week following a drug bust, fatal overdoses doubled within 500 meters of the event, and within two weeks of police action, first responders were called to administer naloxone to reverse drug overdoses at twice the rate, suggesting an increase in non-fatal overdoses.”

Okay, that’s sort of interesting. What do we do with that information? Here’s what they conclude:

We hope our findings, which we intend to replicate in other cities, motivate public officials to shift from drug interdiction to more effective strategies, such as opening overdose prevention centers, creating safer supply programs, and expanding availability of effective treatments for opioid use disorder, including access to medication-assisted treatment.

It is also past time to consider more broadly decriminalizing drug possession and use for a range of illicit drugs across the country, an approach that has worked in Portugal for more than two decades and is nascent in Oregon.

In other words, the authors reach the same conclusion as McArdle, and the conclusion implied by the above coverage: drug enforcement is killing people, so we should do away with the “war on drugs” and try something new.

Let’s bracket the fact that the evidence supporting the efficacy of “overdose prevention centers” and “safer supply programs” is somewhere between non-existent and negative. Let’s bracket the interdiction approaches that are far better supported by the evidence than any of this grab bag of proposals. Let’s bracket even the claim that the Portuguese model can work in the United States (the rolling disaster that is Oregon should make you think twice!).

No, I want to talk about whether such a strong inference is merited by the study that del Pozo1 and Victor are relying on, and which they coauthored. And (again, surprise!) I don’t think it is. But the fact that it’s been so swiftly seized on tells you something about how evidence gets used (and abused) in the policy-making process.

Let’s Talk About the Study

Hereinafter called Ray et al.2 I want to go through the nitty gritty, because I think it’s important to understand what we can and can’t say here.

Start with the data. To conduct their study, Ray et al. collected data from Marion County, Indiana, the home of Indianapolis and the largest county in the state. They have three sources of data: “property room drug seizure data” from Indianapolis P.D.; fatal overdose data from the Marion County Coroner’s office; and OD calls for service/naloxone administration data from Indianapolis EMS. For each data set they have date, time, and location. For the seizures, they also know the type of substance seized.

Using these data, the question Ray et al. are interested in is, basically, whether there’s a “spatiotemporal” association—a correlation in time and physical proximity—between drug busts and overdoses. Are there more overdoses than we would expect in the spatial/temporal proximity of a drug bust? Their dependent variable is the total number of ODs at a given distance in time (they report 7 days, 14d, and 21d) and space (they report 100 m, 250 m, and 500 m).

But how do we know if the number of overdoses is more than “we would expect”? What is it compared to, in other words? What they do is compare “the [count] statistic with a null hypothesis in which the 2 processes are independent, with the null distribution constructed by randomly shuffling event times […] of the drug seizures while keeping the locations and event times of the overdoses fixed.” To translate: they regenerate their count, but randomize the timing of the bust, in theory approximating what the overdose count would be if the bust was unrelated to the overdoses. They do this 200 times to generate a range of possible values to compare the observed value to.
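For the code-minded, the shuffle test described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the authors’ code: the function names (`count_nearby`, `shuffled_null`), the `(x, y, day)` tuple layout, the flat coordinates in meters, and the data are all hypothetical.

```python
import math
import random

def count_nearby(seizures, overdoses, max_dist_m, max_days):
    """Count (seizure, overdose) pairs that are close in both space and time.

    Each event is a hypothetical (x_meters, y_meters, day) tuple.
    The time window is two-sided: it counts overdoses before AND after a bust.
    """
    total = 0
    for sx, sy, st in seizures:
        for ox, oy, ot in overdoses:
            close_in_space = math.hypot(sx - ox, sy - oy) <= max_dist_m
            close_in_time = abs(ot - st) <= max_days
            if close_in_space and close_in_time:
                total += 1
    return total

def shuffled_null(seizures, overdoses, max_dist_m, max_days, n_shuffles=200, seed=0):
    """Build a null distribution by shuffling seizure times across seizure sites.

    Seizure locations and all overdose locations/times stay fixed.
    """
    rng = random.Random(seed)
    times = [t for _, _, t in seizures]
    null_counts = []
    for _ in range(n_shuffles):
        rng.shuffle(times)  # reassign bust times at random
        shuffled = [(x, y, t) for (x, y, _), t in zip(seizures, times)]
        null_counts.append(count_nearby(shuffled, overdoses, max_dist_m, max_days))
    return null_counts

# Made-up toy data: two busts far apart, three overdoses near them.
seizures = [(0.0, 0.0, 10.0), (5000.0, 5000.0, 200.0)]
overdoses = [(50.0, 0.0, 12.0), (0.0, 80.0, 15.0), (5000.0, 5050.0, 203.0)]

observed = count_nearby(seizures, overdoses, max_dist_m=100, max_days=7)
null_counts = shuffled_null(seizures, overdoses, max_dist_m=100, max_days=7)

# Share of shuffles that produce a count at least as large as the observed one.
share_as_large = sum(c >= observed for c in null_counts) / len(null_counts)
```

Note what the comparison can and cannot tell you: a small `share_as_large` says the busts and overdoses line up in time more than chance would predict, and nothing about which one came first.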

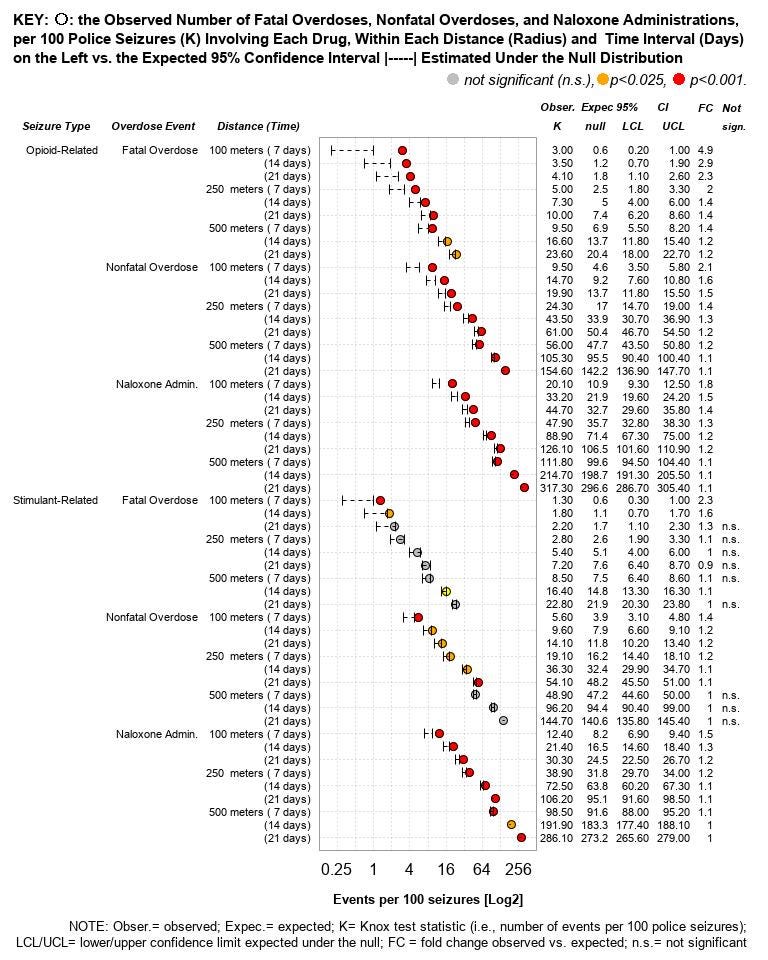

The figure above reports their initial results. The points are the observed values (on a log scale and normalized per 100 seizures), and the bars are the expected values. They report figures broken out by drug type and outcome type, at all of their time/space intervals, with ratios (FC = fold change) of the expected vs. observed values. Color shows the p-value of the comparison of the two values.

What we can say from this, more or less, is that drug bust sites see statistically significantly more ODs than they would if there was no correlation between drug busts and ODs. That relationship holds at all levels of distance/time for opioid seizures, but is much weaker for stimulant (i.e. cocaine, meth) seizures.

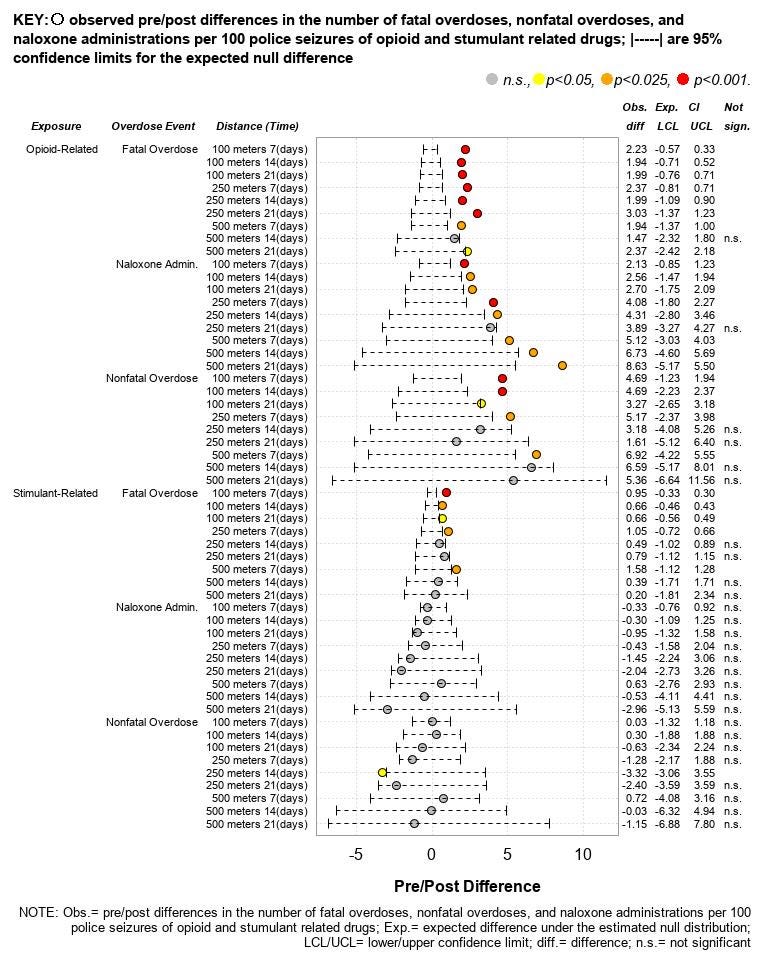

One limitation of this analysis is that it doesn’t distinguish between ODs before the seizure and after it. So the authors repeat their analysis, but this time by comparing estimated and observed pre-post differences (i.e. they count overdoses before and after the bust, and subtract the former from the latter). A similar pattern obtains: the pre-post difference in opioid ODs is elevated relative to the expected distribution, while the difference for stimulants is about what we would expect.
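The pre-post statistic is easy to state in code. A minimal sketch follows, again with a hypothetical function name (`pre_post_difference`), a hypothetical `(x, y, day)` tuple layout, and made-up data. The paper then compares this number to its shuffled-time expectation, which this snippet does not do.

```python
import math

def pre_post_difference(seizures, overdoses, max_dist_m, max_days):
    """Nearby overdoses after each bust minus nearby overdoses before it.

    Events are hypothetical (x_meters, y_meters, day) tuples.
    """
    diff = 0
    for sx, sy, st in seizures:
        for ox, oy, ot in overdoses:
            if math.hypot(sx - ox, sy - oy) > max_dist_m:
                continue  # too far from this bust to count
            dt = ot - st
            if 0 < dt <= max_days:
                diff += 1  # overdose in the post window
            elif -max_days <= dt < 0:
                diff -= 1  # overdose in the pre window
    return diff

# Made-up toy data: one bust on day 10; one nearby OD before, two after, one far away.
seizures = [(0.0, 0.0, 10.0)]
overdoses = [(10.0, 0.0, 8.0), (20.0, 0.0, 11.0), (30.0, 0.0, 14.0), (0.0, 5000.0, 12.0)]

difference = pre_post_difference(seizures, overdoses, max_dist_m=100, max_days=7)
```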

What we’ve observed, to distill down even further, is a correlation, in time and space, between drug busts and overdoses, and in particular between drug busts and an increase in overdoses in the period following those busts, relative to the period before.

Well, Okay, Why?

Here’s the way Ray et al. explain their theory:

People can develop a tolerance for opioids, although overdose occurs when dosage exceeds tolerance to the point of respiratory failure. Unknown opioid tolerance at relapse is a documented overdose risk factor … Reductions in tolerance can occur after any involuntary disruption of an individual’s opioid supply, and accidentally ingesting a dose beyond one’s tolerance can be fatal.…

This same mechanism has been documented as occurring in the illicit drug market following disruptions from an arrested supplier and consumers contending with new and potentially unfamiliar products. The impact of these drug market disruptions may be particularly salient for people who use opioids, who can experience painful withdrawal symptoms and diminished biological tolerance even after short periods of abstinence. There is also a risk for people who knowingly use stimulants but are opioid naïve and, thus, have lower opioid tolerance; they might seek a new supplier following a drug market disruption and then overdose from fentanyl-contaminated stimulants.

In other words, drug busts cut off supply, reducing people’s tolerance. Then, when they shift to a new dealer, they take their usual dose and, due to the lowered tolerance, OD. In their op-ed, del Pozo and Victor further reference the “trustworthy drug dealer” theory:

Illicit drugs are never fully safe, of course, but many people who use drugs establish a relationship with their supplier and have some level of confidence in the quality of the drug they purchase. When an established supply line of drugs shuts down, people who use opioids face painful withdrawal. They will urgently seek out a new supply. And that batch may be unsafe.

Drug bust cuts off supply, people shift to new dealer they don’t trust, new dealer kills them.

So what we have is an association and a causal story about why the association exists. Two questions to ask here: 1) Is this story consistent with the association? 2) Is it the most persuasive story consistent with the association?

To 1, I think the answer is at best “maybe.” Think through the story being provided: a drug bust happens, creating an interruption in supply and leading to uncertainty about tolerance/purity of product, in turn producing overdoses. What this story doesn’t explain is: why would those new overdoses be in the same place as the original drug bust? Why, in particular, should the biggest effects be closest to the site of the bust?

I don’t see a reason this should follow. 100 m is about 330 feet, which is roughly the length of a city block. Sure, the bust could lead to a disruption in supply. But why would people then start overdosing within a block of the bust, as opposed to obtaining their drugs somewhere else, even another block over? Indeed, if cops have done a drug bust in an area, it seems likely that drug dealing activity would be displaced to a different, less hot area, in which case you’d expect larger, not smaller, effects further away from the bust site! So at the very least, there is reason to question the consistency of the story with the association.

To 2, let’s go back to what Ray et al. actually tell us. Think about what correlations, spatiotemporal or otherwise, mean. Let’s say you know that X and Y are correlated. To the extent that this implies any causal relationship, it gives us one of three options: X causes Y; Y causes X; or some unobserved variable Z causes both X and Y.3

Ray et al. think that drug busts cause overdoses. But even if their evidence is consistent with that theory, it’s also consistent with overdoses causing drug busts, or some unobserved variable causing both. And it is very easy to tell a story where, for example, a surge in ODs in a certain area attracts increased police attention, meaning that the ODs cause the busts. Or we could say that increased dealing activity causes both the busts and the ODs.

Ray et al., of course, are not just presenting a simple cross-sectional correlation. Recall that they create a counterfactual range of “expected” observations, by holding the location and timing of overdoses constant and randomly varying the timing of the bust. But this just establishes that overdoses co-occur with drug busts more than we would expect if they were totally uncorrelated with each other. It does not establish that drug busts cause overdoses.

More consistent with the Ray et al. story is the pre-post analysis. The count of proximate overdoses at t > 0 is greater than the count of overdoses at t < 0, and the observed difference between those two is greater than the expected difference recovered by the same random resampling approach previously described.

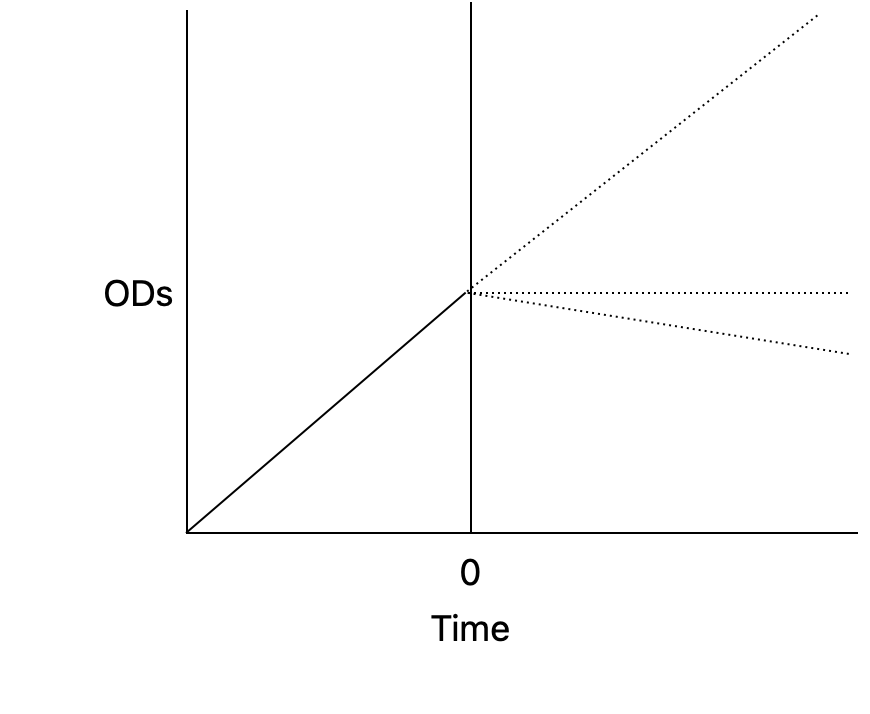

This is consistent with busts causing overdoses. But it is also consistent with overdoses rising before the bust, and then continuing to rise, plateauing, or declining without returning to the pre-bust level. Look at the image above: the number of overdoses from the beginning of the period of observation to the intervention (t = 0) is the area under the solid line. The number of overdoses following the intervention to the end of the period of observation is the area under any of the dotted lines. All of those values would be greater than the ODs at t < 0. But they would also be consistent with a story in which ODs start to rise, the cops notice, they do a bust, and ODs simply continue to rise at the same rate, or plateau, or decline. The overdoses cause the bust (or criminal behavior causes both the busts and the overdoses). But because the total number of overdoses in the post period is cumulatively greater, we still get more than expected.
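To make that concrete, here is a toy calculation in Python. Everything in it is an assumption for illustration: the overdose rate rises by one per day, the bust lands on day 10, and the bust has no effect whatsoever on the rate in any scenario. It only shows that the raw pre-post gap can be positive without any causal effect; it does not reproduce the paper’s shuffled comparison.

```python
def daily_rate(day, bust_day, after):
    """Toy expected overdoses per day. The bust has NO causal effect on this rate."""
    if day <= bust_day:
        return float(day)  # steady rise that predates the bust
    peak = float(bust_day)
    if after == "rise":
        return float(day)  # keeps rising at the same rate
    if after == "plateau":
        return peak  # levels off at the bust-day rate
    if after == "slow_decline":
        return peak - 0.5 * (day - bust_day)  # falls, but slower than it rose
    raise ValueError(f"unknown scenario: {after}")

def pre_post(bust_day, window, after):
    """Sum the toy rate over the `window` days before and after the bust day."""
    pre = sum(daily_rate(d, bust_day, after)
              for d in range(bust_day - window, bust_day))
    post = sum(daily_rate(d, bust_day, after)
               for d in range(bust_day + 1, bust_day + window + 1))
    return pre, post

results = {
    after: pre_post(bust_day=10, window=7, after=after)
    for after in ("rise", "plateau", "slow_decline")
}
# results == {"rise": (42.0, 98.0), "plateau": (42.0, 70.0), "slow_decline": (42.0, 56.0)}
```

In all three scenarios the post-bust total beats the pre-bust total, even though the bust does nothing in this toy world.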

You might think this story isn’t proved by the data, and that’s fine. I don’t need to convince you that the alternative version is the definitive truth, only that it’s at least as persuasive an explanation for the association as Ray et al.’s is. If my explanation is as persuasive as, or more persuasive than, theirs (if it is at least as consistent with the evidence as theirs is), then we should be wary of accepting their claimed causal story as following from the evidence.

Can I Believe Vs. Must I Believe

The argument that I made above is, I think, sort of a sour grapes argument. Pointing out that data can be interpreted in a way that is just as plausible as the initial causal story is not research or analysis, it’s just criticizing other people’s work. And while I think I am correct that the study is perfectly consistent with stories other than “drug busts cause ODs,” it often irritates me to see this sort of argument in the wild. Go do the analysis yourself!4

But I think in this case, the argument is worth making. And it is worth making because of the way in which, as I noted at the start of this post, the study is being used.

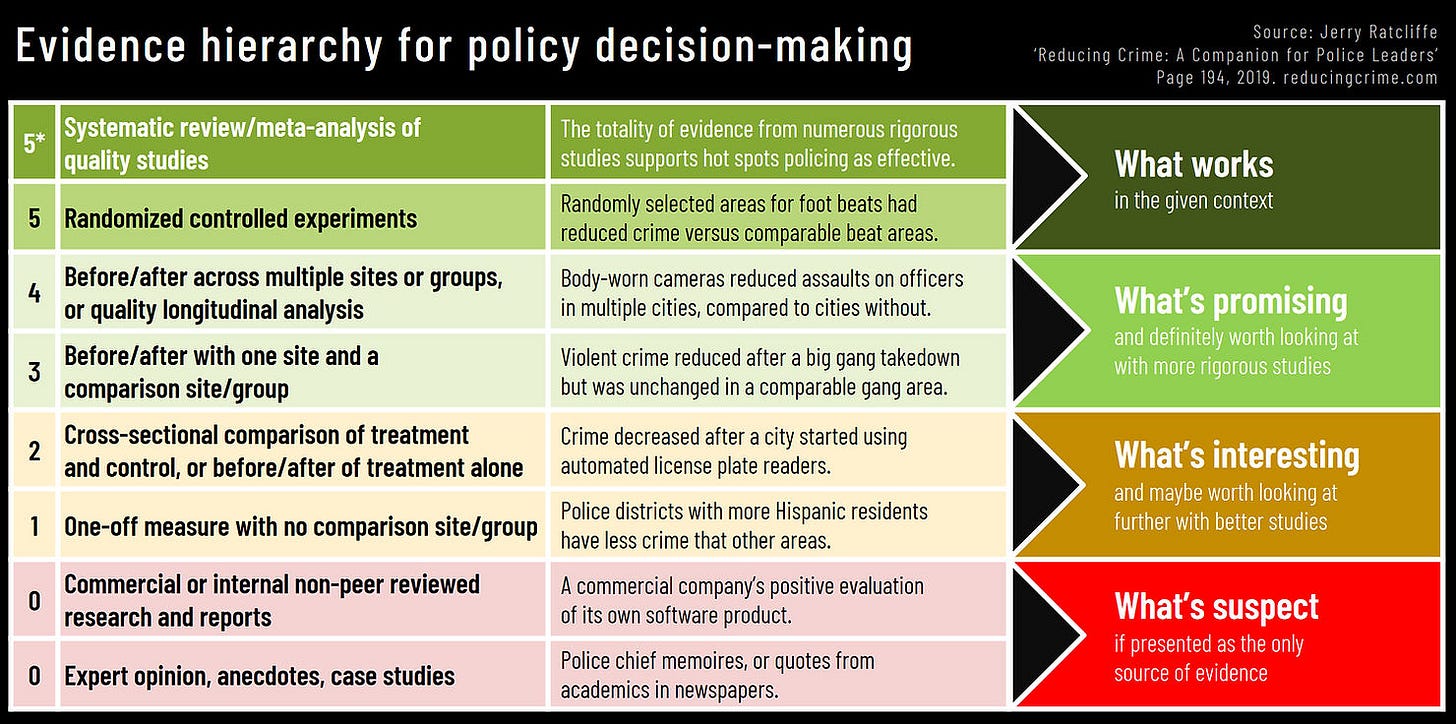

Everyone is in favor of evidence-based policy-making. But what does that actually mean? Approximately, it means that policy should be guided by what the evidence tells us is true. But how do we know that a study establishes something as truth? The short answer is we never know for sure (all studies can be subjected to critiques of data quality or researcher degrees of freedom, critiques as to replicability5 and external validity, etc.), but some studies are more plausibly telling us about the world than others. Studies which have a control group are better than those that don’t; studies which randomize are better than those that don’t; surveys of high-quality studies are the best. (I like the hierarchy above, from Temple’s Jerry Ratcliffe, for explaining this.)

I don’t actually think you need a survey of RCTs before you make policy change. That’s never going to happen. But I do think that a principle of humility applies: the strength of the policy conclusion you should be willing to draw from a study needs to be proportional to the quality of the evidence. Yes, sure, all I have really argued is that the study can be plausibly interpreted several ways, only one of which the authors prefer. But is it proportional to infer from an ambiguous study that we should end drug interdiction and decriminalize/legalize?

Indeed, the paper itself avoids such sweeping policy conclusions! Instead, Ray et al. write:

Officers might also use the considerable discretion at their disposal when interacting with persons who use drugs, particularly in enforcing misdemeanors or nonviolent felonies that regulate drugs to reduce harms that might come from disrupting an individual’s drug supply. Additionally, our study suggests that information on drug seizures may provide a touch point that is further upstream than other post overdose events, providing greater potential to mitigate harms. For example, although the role of law enforcement in overdose remains a topic of debate, public safety partnerships could entail timely notice of interdiction events to agencies that provide overdose prevention services, outreach, and referral to care.

“You should be careful about enforcement, and make sure to provide treatment after you do a bust!” is very different from “time to start doing safe supply!” And this seems like an obviously more proportional position, given the strength of the evidence.

Here, I want to make a larger point, about how evidence gets used in the policy-making process. There’s a distinction in policy evaluation I like to attribute to my friend the UCLA sociologist Gabriel Rossman, the distinction between “can I believe” vs. “must I believe.”6 Here’s Rossman, in context talking about the touchy question of control variables vs. mechanisms, but explaining the distinction:

In practice, theoretical issues are often political issues and so control versus mechanism is yet another instance of our old friend, that depending on how we feel normatively about a factual premise our epistemological standard shifts from “can we believe it” to “must we believe it.” If you find a zero-order association consistent with your worldview, then anything that threatens to explain it away is a mechanism. And if you find a zero-order association inconvenient for your worldview, then anything that promises to explain it away is a control.

Relatedly, if you find some evidence that could plausibly confirm your worldview (“can I believe it?”), you will treat it very differently than if it disconfirms your worldview (“must I believe it?”).7 This is a powerful tendency, and it comes up all the time in the drug policy debate. The same people who are now touting Ray et al. as indisputable evidence that we should end the war on drugs are also happy to tear to pieces—often quite viciously—studies which do not conform to their priors. And, in fairness, so too are people on my side, though it’s harder for me to identify it, if for no other reason than there are many fewer of us on Twitter.

Drug policy, I submit, is particularly problematic on this front, because so much of the evidence is of such poor quality. This is not a dig at Ray et al., which is by the Ratcliffe scale above somewhere between a 2 and a 3.8 That puts it miles ahead of most other research in this space! But the point is more general: this tendency to filter on our policy preferences will lead us to draw conclusions unmerited by the evidence.

Anyway, here’s David Card:

I feel like I should briefly disclaim that del Pozo and I have met a couple of times. He’s always struck me as a thoughtful and reasonable guy, and he did some good things when he was top cop in Burlington. I just, respectfully, think he’s claiming way too much here, and it’s my job to take him to task. Brandon, if you’re reading this, don’t take it personally!

It’s got nine coauthors. Can anyone explain to me why public health papers require *so many* coauthors? I’ve never gotten it.

Or X causes Y and Y causes X. Or variables A, B, and C cause X and Y. Or … look, I’m oversimplifying, but you get the point.

Actually, Ray et al.’s approach is particularly frustrating to me because I suspect the data would let you get at causal questions if they were analyzed in a different way. Cluster OD deaths at the census block and/or census block group level; make your drug busts the treatment; then do a rolling diff-in-diff with count of ODs and treated vs. untreated blocks, or treated vs. not-yet-treated blocks. You have to do some funny stuff to account for rare events, but that’s totally doable.

I suspect the reason the authors did not do this is that they are public health scholars, and the econometric tools that I am talking about are underutilized in public health. Which is silly! And a major reason public health people get mad when econometricians use them and come up with counterintuitive results. But hey, Ray et al., if you want to share your data, I’m happy to take a shot at it!
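For readers who haven’t met diff-in-diff, here is the simplest possible version in Python: two periods, treated vs. untreated blocks, no rolling treatment timing, and no adjustment for rare events. It is a sketch of the basic idea only, not the full design described in this note; the function name `diff_in_diff`, the row layout, and the counts are all made up.

```python
from statistics import mean

def diff_in_diff(rows):
    """Basic 2x2 difference-in-differences on mean overdose counts.

    rows: dicts with hypothetical keys 'treated' (bool), 'post' (bool), 'od_count'.
    """
    def cell_mean(treated, post):
        return mean(r["od_count"] for r in rows
                    if r["treated"] == treated and r["post"] == post)

    treated_change = cell_mean(True, True) - cell_mean(True, False)
    control_change = cell_mean(False, True) - cell_mean(False, False)
    # Change in busted blocks beyond the change seen in un-busted blocks.
    return treated_change - control_change

# Made-up counts for four census blocks, before and after a bust in blocks A and B.
rows = [
    {"block": "A", "treated": True, "post": False, "od_count": 2},
    {"block": "A", "treated": True, "post": True, "od_count": 5},
    {"block": "B", "treated": True, "post": False, "od_count": 4},
    {"block": "B", "treated": True, "post": True, "od_count": 7},
    {"block": "C", "treated": False, "post": False, "od_count": 1},
    {"block": "C", "treated": False, "post": True, "od_count": 3},
    {"block": "D", "treated": False, "post": False, "od_count": 3},
    {"block": "D", "treated": False, "post": True, "od_count": 5},
]

estimate = diff_in_diff(rows)
```

The point of the control blocks is to net out a citywide trend: if ODs are rising everywhere, the untreated blocks show it, and only the extra change in busted blocks is attributed to the bust. That attribution still rests on the usual assumption that treated and untreated blocks would have trended alike absent the bust.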

This applies to Ray et al., by the way. I don’t want to take the space to talk about it, but if your theory is that we should end drug enforcement because a study of one county in Indiana found a spatiotemporal association, I do not think your approach passes muster.

Rossman isn’t sure if he actually coined it, but I got it from him, so I’m giving him credit.

“But Charles,” you say smugly, “aren’t you asking ‘must I believe it?’ of this study?” And the answer is yes, and you’re welcome for doing it for you when nobody else bothered to.

The synthetic counterfactual range isn’t really a control group, so it’s not really 3, but it’s better than 2 too.

Rossman presumably cribbed it from Haidt (it’s at location 1623 in the Kindle edition of The Righteous Mind), who in turn cites Tom Gilovich (Gilovich 1991, p. 84).